Engaging billions of consumers every day. Driving measurable business outcomes.

With Karix, enterprises harness AI-powered decisioning, seamless engagement, and trusted security—turning every customer interaction into impact.

Dominant Market Position

We command a robust 35% market share in the Indian CPaaS (Communication Platform-as-a-Service) industry. Globally, we rank among the top three publicly listed CPaaS companies.

Channel Leadership

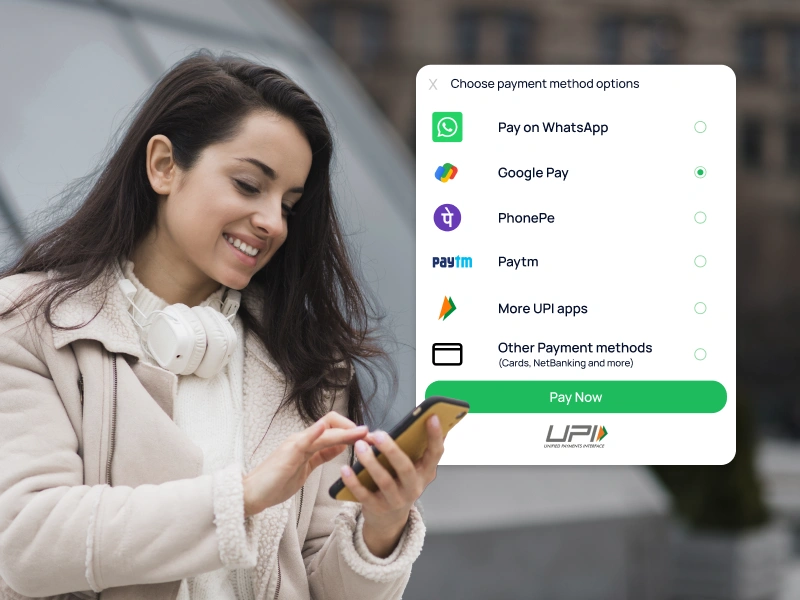

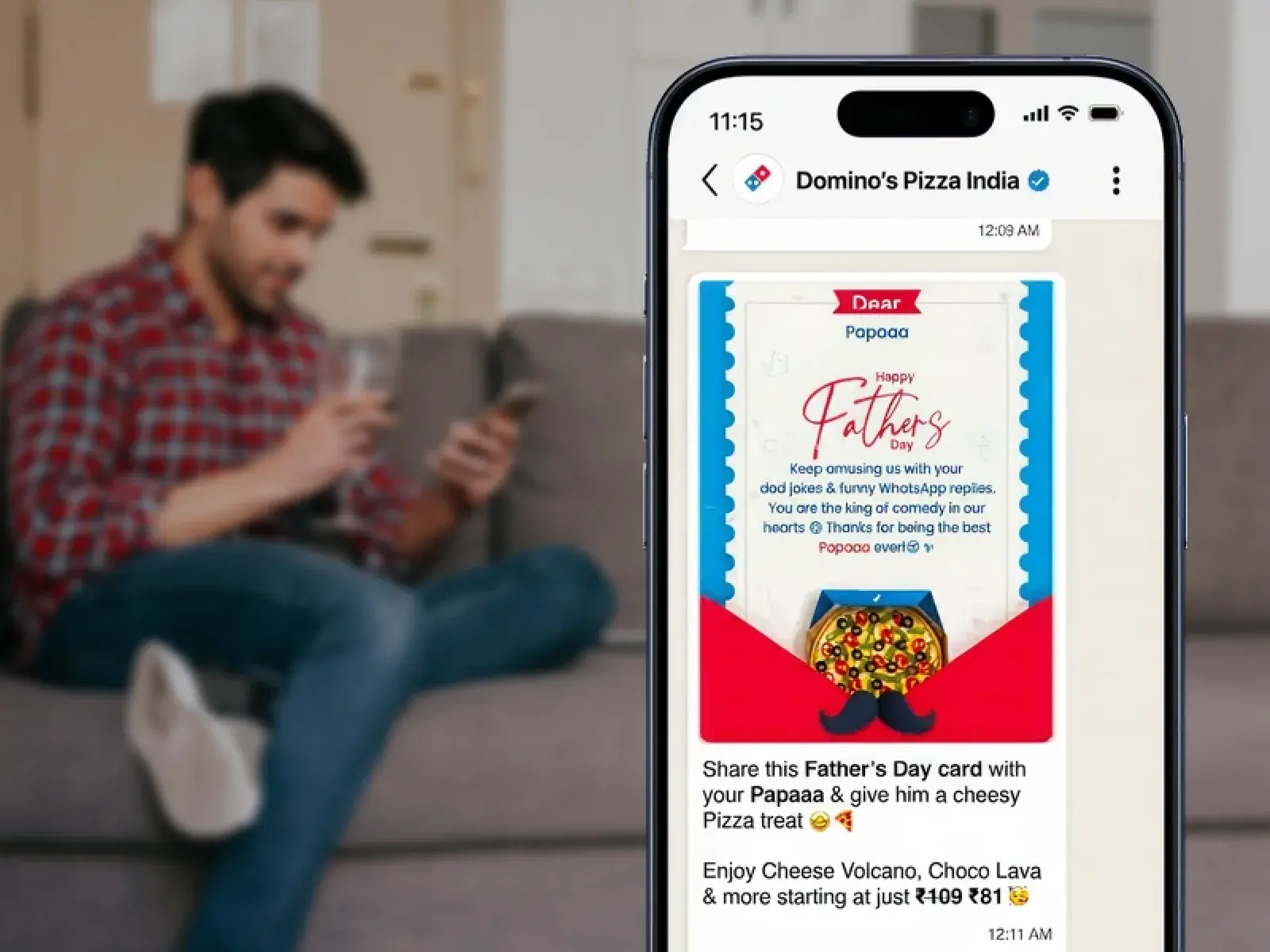

We bring omnichannel communication strategies to life, with significant shares across key channels such as WhatsApp, Google RCS, SMS, Truecaller (exclusive partnership), Voice, Email, and more.

The choice of leaders across sectors

With over 2,000 customers across various industries, we are trusted by over 70% of the top 100 enterprises in the market.

The only CPaaS partner you need

AI-powered engagement to deliver consistent business results

AI-powered journeys

AI for every industry

Omnichannel suite

At enterprise scale, communication isn’t just about reach; it’s about business outcomes.

Karix gives you exclusive advantages that drive measurable growth, efficiency, trust, and innovation.

Lower total cost of ownership

We combine AI, strategy, and channel orchestration to reduce operational overhead and boost responsiveness, helping you do more with less.

15-20%

cost savings

A leading e-commerce brand in India

Enterprise-grade security, trusted engagement

With compliance, fraud prevention, and consent built in, Karix safeguards every customer interaction. The result: customers engage with confidence, and your brand builds lasting trust.

6Mn+

scam attacks identified & blocked

India’s largest bank

Performance that scales

Whether the goal is acquisition or retention, Karix designs AI-powered customer journeys that improve conversions, reduce drop-offs, and strengthen loyalty.

2-3x

growth

Partnerships and recognitions

Meta Partner of the Year

No. 1 Global Partner

Exclusive Global Partner

Tanla scores 80 in the S&P Global ESG Score 2025

The right message, delivered by the right team

Empower every department to own the customer experience and contribute directly to revenue goals.

Marketing

Accelerate user acquisition and scale engagement with AI-powered conversations that nurture leads across the entire customer lifecycle.

Performance Marketing

Boost CLTV and maximise ROI through data-driven interactions and optimised conversion pathways across all key channels.

Product & Operations

Seamlessly drive user engagement and streamline critical transactional messaging throughout the entire customer journey.

Customer Support & CX

Resolve queries faster, reduce ticket volumes, and cut operational costs with intelligent, automated support solutions.

Let’s map the next chapter of your customer engagement

From mission-critical alerts to high-conversion campaigns, Karix is the partner of choice for enterprises, startups, and partners who need secure, scalable, and measurable communication.
